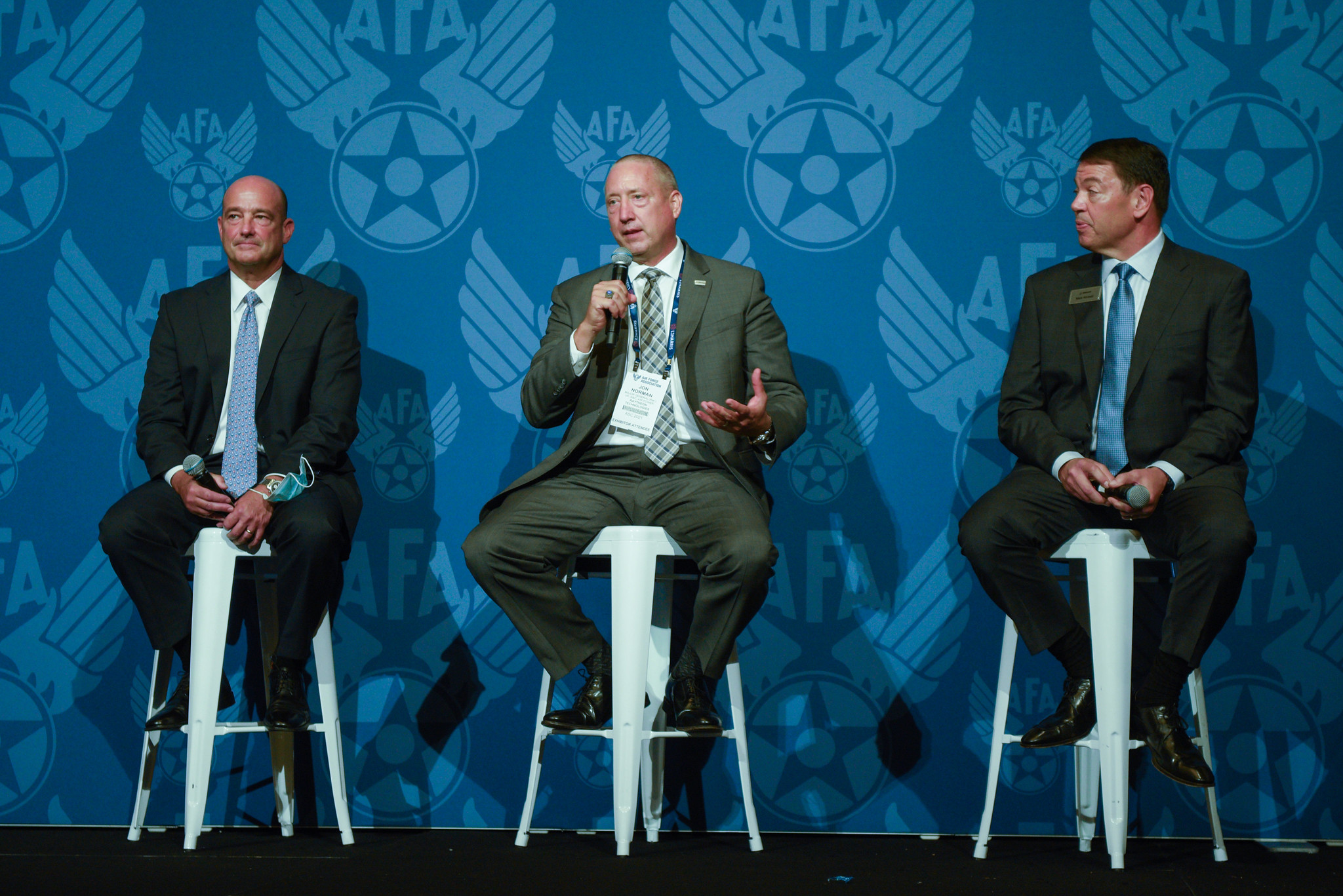

Secretary of the Air Force Frank Kendall alluded to an event in which artificial intelligence helped to identify a target or targets in “a live operational kill chain” in his remarks at the Air Force Association’s Air, Space & Cyber Conference in National Harbor, Md., on Sept. 20.

Kendall offered the description as an example of his “No. 1 priority,” which he said is investing in “meaningful military capabilities that project power and hold targets at risk anywhere in the world.”

Kendall said that in 2021, the Air Force’s chief architect’s office “deployed AI algorithms for the first time to a live operational kill chain” involving the Air Force’s multi-site Distributed Common Ground System and an air operations center “for automated target recognition.”

He said the event represented “moving from experimentation to real military capability in the hands of operational warfighters.”

Kendall did not provide details of the mission but said the broad intent of the new capability is to “significantly reduce the manpower-intensive tasks of manually identifying targets—shortening the kill chain and accelerating the speed of decision-making.”

Air Force spokesperson Jacob N. Bailey told Air Force Magazine in an email, “These AI algorithms were employed in operational intelligence toolchains, meaning integrated into the real-time operational intel production pipeline to assist intelligence professionals in the mission to provide more timely intelligence. The algorithms are available at any [DCGS site] and via the [DCGS] to any [air operations center] whenever needed, so they’re not confined to a particular location.”

In addition to holding targets at risk, the Defense Department has acknowledged a further need to gather intelligence from afar as it pursues its “over-the-horizon” strategy of monitoring Afghanistan for terrorist activities. Kendall said the evacuation from Kabul included “continuous surveillance from space and the air.”

In July, Secretary of Defense Lloyd J. Austin III said DOD had “significantly” increased the number of its AI efforts over the prior year. Before that acceleration, the department adopted five “ethical principles” for AI development and use.