The Space Force’s chief technology and innovation officer has an idea for how not to let the military’s artificial intelligence get out of hand.

Lisa Costa’s office focuses on “asymmetric, disruptive technology,” she told members of the press at the Space Symposium in Colorado Springs, Colo., in April. “We’re really looking at leap-ahead capability as opposed to just keeping up.”

The office’s role isn’t to sustain anything, Costa said, but instead to “come up with some of the innovative ideas, get them started.”

Costa predicted that artificial intelligence and machine learning (AI/ML) will become “critically important,” though conceded that so far, “a lot of people talk about it—but have not necessarily implemented it—for space.”

Yet she imagined that the combined experience of the world’s space companies and military space organizations gathered for the expo would ultimately inform AI algorithms.

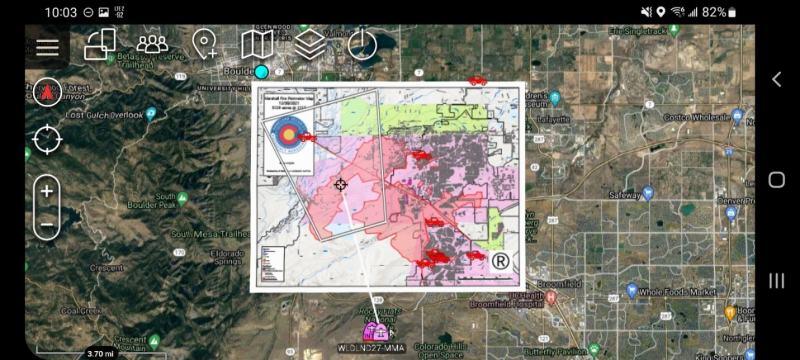

Consider the fact that the Space Force is the smallest service but has the largest physical domain, Costa said.

“We have to rely on machine learning and AI to be able to focus the attention of our Guardians on the things that are the most important, right? What is normal?” she said, referring to the practice of space domain awareness. “What is behaving as, you know, not expected?”

Treating some degree of future military AI as a given, David van Weel, NATO’s Assistant Secretary General for Emerging Security Challenges, said upon the publication of NATO’s AI strategy that the allies need to be “early to the party” to “bridge a gap of mistrust” in AI.

Van Weel said that “in a situation where regulation comes after the broad misuse of technology,” NATO members need to “make sure that we understand new technologies—not to militarize them, no—but to understand the security and defense implications.”

In an emailed response to questions, Brig. Gen. John M. Olson, the Department of the Air Force’s chief data and AI officer, told Air Force Magazine the department is “carefully assessing areas where we can drive the greatest operational effectiveness or return on investment” in AI.

Olson described how AI could contribute to a resilient space architecture, the No. 1 “operational imperative” of Air Force Secretary Frank Kendall.

“The system of systems, nodes, and elements within an architecture can benefit from the enhanced situational awareness that drives more informed, accurate, and appropriately-optimized responses, plus increased adaptability and improved learning,” Olson said.

“For the Air Force and Space Force in the near-term, our goal is to defend what we have and continue to rapidly evolve and transition to resilient, hybrid, and AI/ML-enhanced system architectures.”

Costa said securing commercially developed AI “would be a great problem to have,” but she also recognizes AI’s vulnerabilities and has an idea for how the government could proceed safely.

“I fundamentally believe that we need to protect certain algorithms,” Costa said. “And that starts before acquisition.”

Code development environments can be instrumented, Costa said, “so that you know what’s being coded.”

“And when we have vendors that are bidding, we need to have language that says, ‘You will use this DevSecOps environment as a server. We will provide your company with the log-on credentials. You will log on, and you will build those algorithms on our networks.’”